Repository

- Entity Relationship Diagram

- Table Description

- Data Sources

- Data Destinations

- How to Use Template

- Authorizing Data Sources

- Authorizing Data Destinations

- Most Common Errors

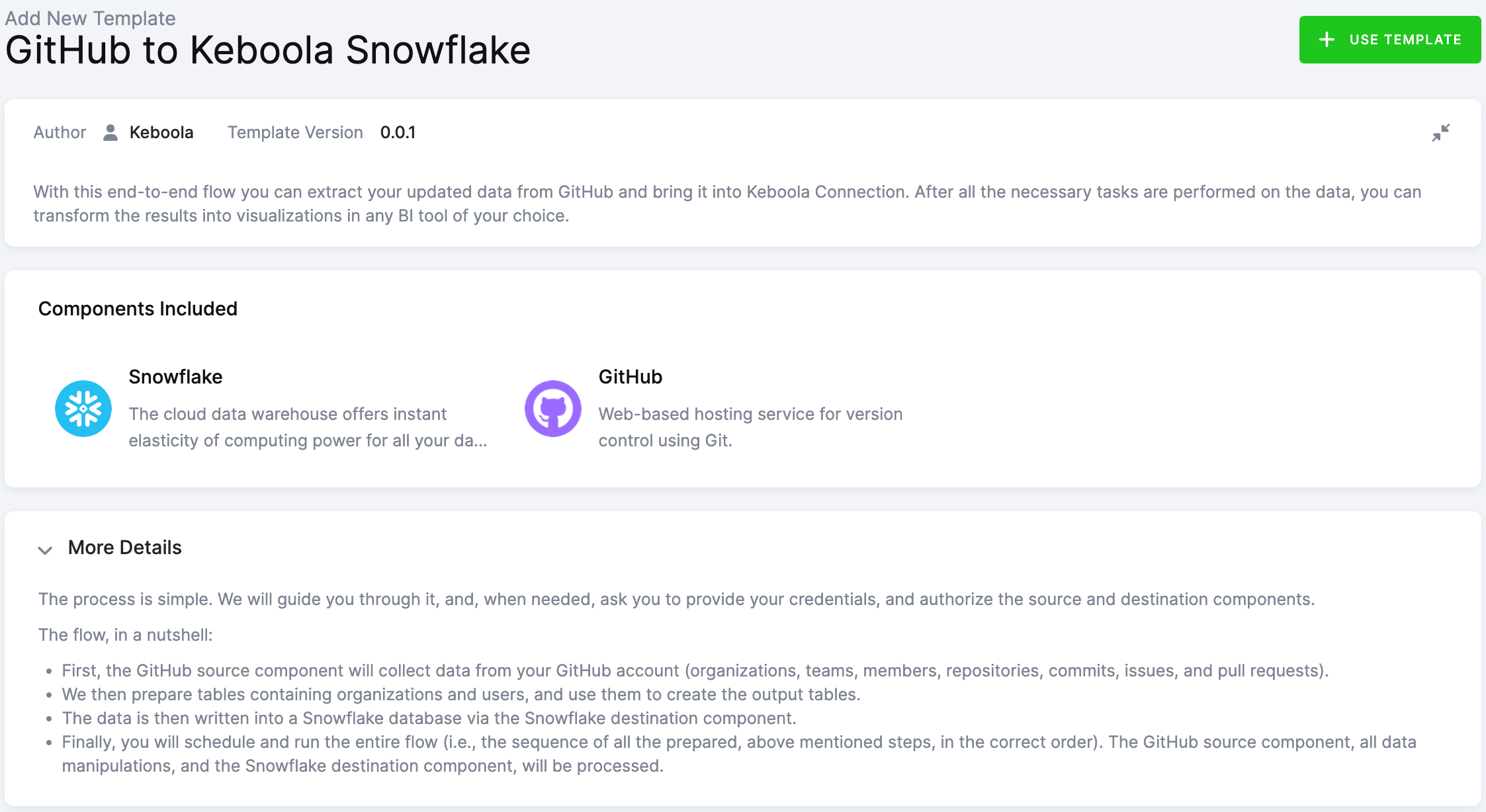

With this end-to-end flow, you can extract up-to-date data from your repository tool (GitHub) and bring it into Keboola. Once all the necessary tasks have been performed on the data, you can turn the results into visualizations in any BI tool of your choice.

By using our repository template, you will get an overview of your repositories and the activity in them.

The flow, in a nutshell:

- First, the GitHub data source connector will collect data from your GitHub account (organizations, teams, members, repositories, commits, issues, and pull requests).

- We will then prepare tables containing organizations and users and use them to create the output tables.

- The data will be written into a Google BigQuery database, a Snowflake database, or a Google Sheet via a data destination connector.

- Finally, you will schedule and run the entire flow, i.e., all the steps above in the correct order: the GitHub data source connector, all data manipulations, and the data destination connector.

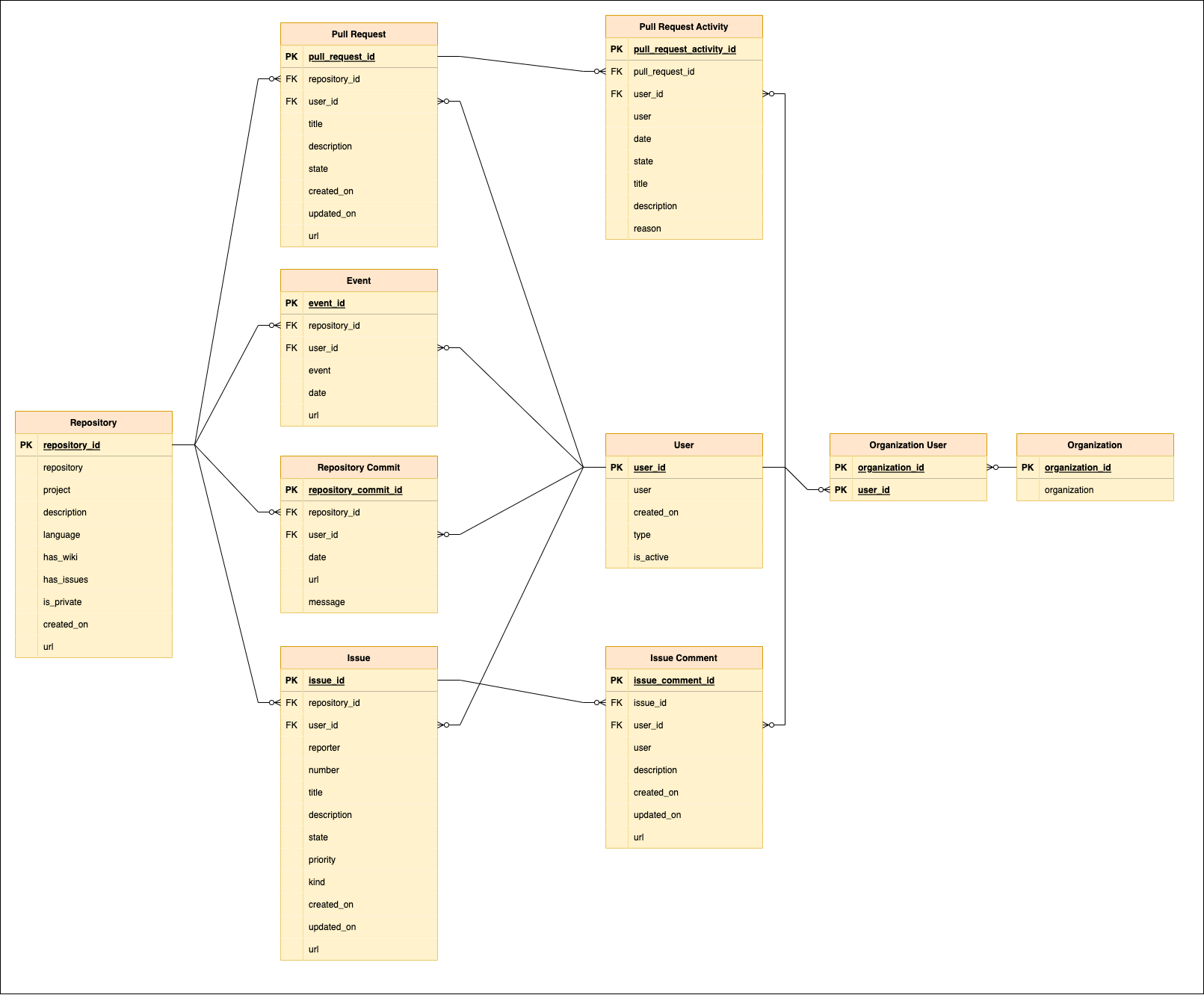

Entity Relationship Diagram

An entity-relationship diagram is a specialized graphic that illustrates the relationships between entities (here, the output tables) in the data destination.

Table Description

| Name | Description |
|---|---|
| EVENT | repository events |
| ISSUE | issues, their repository, user, and priority; other details |
| ISSUE COMMENT | comments for each issue |
| ORGANIZATION | list of organizations |
| ORGANIZATION USER | data on organizations and users combined |
| PULL REQUEST | list of pull-request commits and information on who created them and when |
| PULL REQUEST ACTIVITY | data on pull-request activities |
| REPOSITORY | list of repositories and information on them, such as privacy, issues, wiki, etc. |
| REPOSITORY COMMIT | list of repository commits and information on who created them and when |
| USER | list of users |
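The ORGANIZATION USER table combines organizations and users. The minimal Python sketch below illustrates that kind of combination as a join through membership pairs. The column names (`org_id`, `name`, `user_id`, `login`), the membership input, and the function name are hypothetical simplifications, not the template's actual schema or transformation code.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Iterable


# Hypothetical, simplified rows; the template's real columns may differ.
@dataclass(frozen=True)
class Organization:
    org_id: str
    name: str


@dataclass(frozen=True)
class User:
    user_id: str
    login: str


@dataclass(frozen=True)
class OrganizationUser:
    org_id: str
    org_name: str
    user_id: str
    login: str


def combine_organization_users(
    orgs: Iterable[Organization],
    users: Iterable[User],
    memberships: Iterable[tuple[str, str]],
) -> list[OrganizationUser]:
    """Join organizations and users through (org_id, user_id) membership pairs."""
    orgs_by_id = {o.org_id: o for o in orgs}
    users_by_id = {u.user_id: u for u in users}
    rows = []
    for org_id, user_id in memberships:
        org = orgs_by_id.get(org_id)
        user = users_by_id.get(user_id)
        if org is None or user is None:
            # Inner-join semantics: drop pairs that reference unknown entities.
            continue
        rows.append(OrganizationUser(org.org_id, org.name, user.user_id, user.login))
    return rows
```

Pairs that point to an organization or user missing from the input are dropped, as in an SQL inner join; a left join would keep them with empty columns instead.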

Data Sources

These data sources are available in Public Beta:

- GitHub

Data Destinations

These data destinations are available in Public Beta:

- BigQuery Database

- Google Sheets

- Snowflake Database Provided by Keboola

- Snowflake Database

How to Use Template

The process is simple. We will guide you through it, and, when needed, ask you to provide your credentials and authorize the data destination connector.

First, decide which data source and which data destination you want to use. Then find the corresponding template on the Templates tab in your Keboola project and click + Use Template.

A page with information about the template opens. Click + Use Template again.

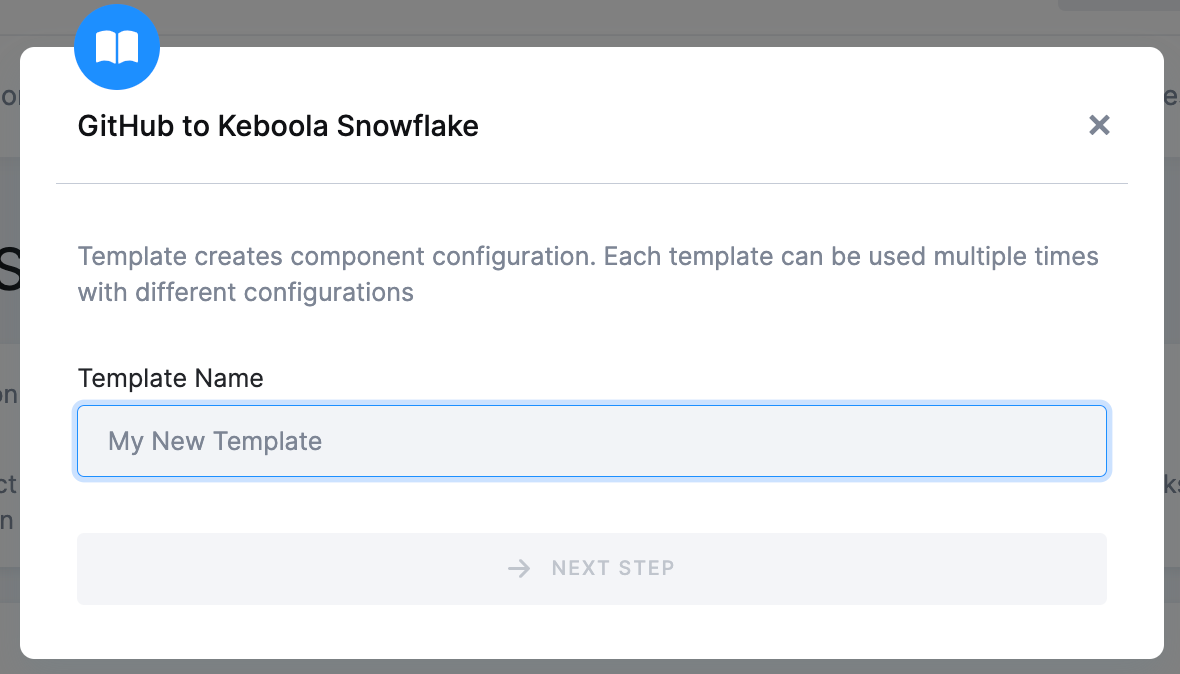

You will be asked to name the template instance you are about to create. You can use the template as many times as you want; naming each instance keeps everything organized.

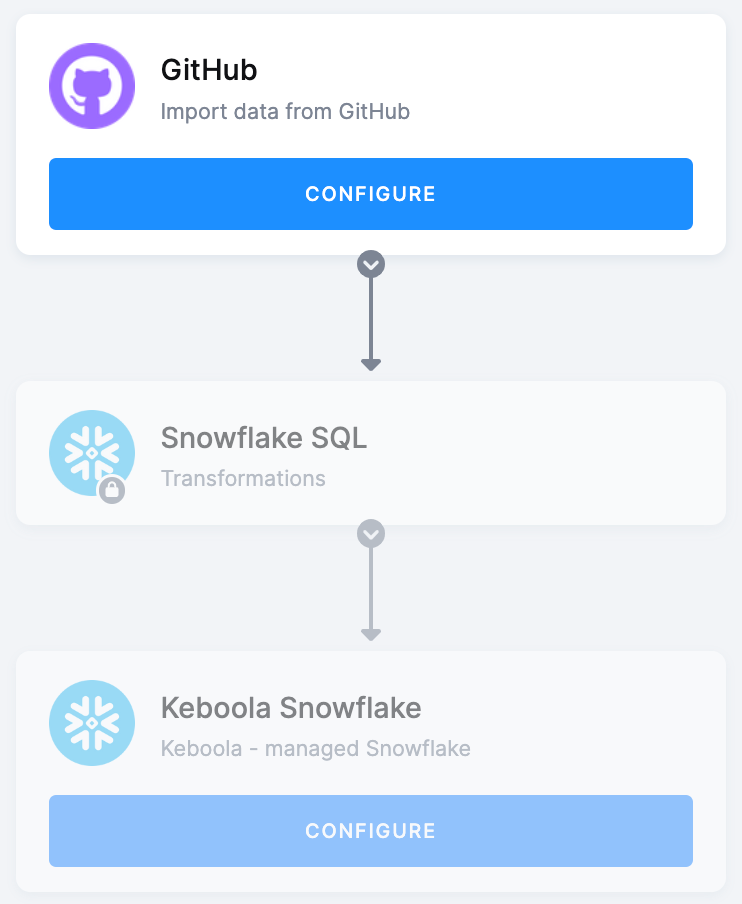

Click Next Step to open the template builder. Fill in all required credentials and complete the OAuth authorizations.

Important: Follow all the steps carefully so that the newly created flow does not fail because of authorization problems. If you are struggling with this part, see the sections Authorizing Data Sources and Authorizing Data Destinations below.

Follow the steps one by one and authorize at least one data source from the list. Finally, authorize the data destination as well.

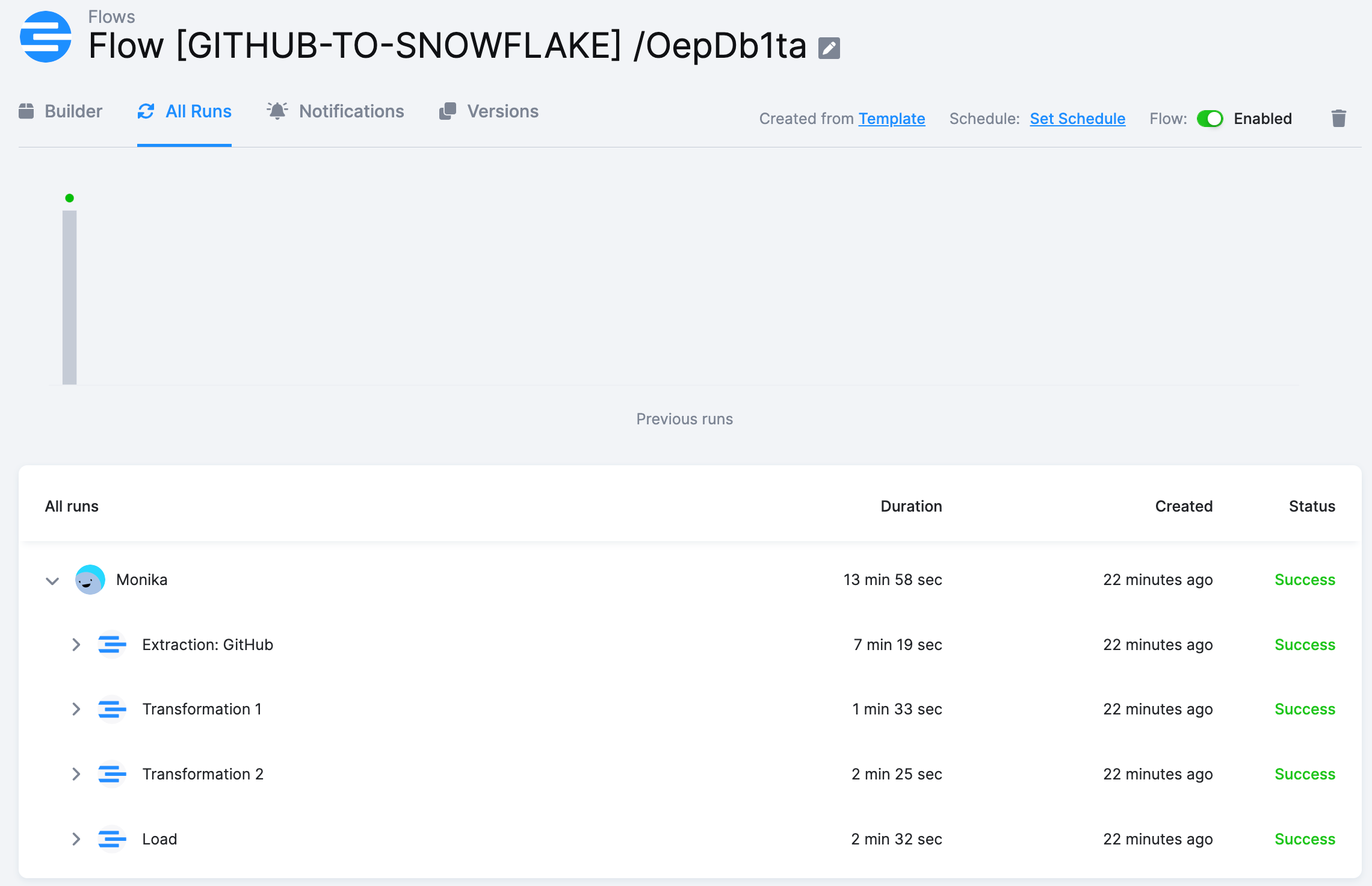

When you are finished, click Save in the top right corner. The template builder will create your new configuration, and when it is done, you will see the newly created flow.

Click Run Template; a few minutes later, you can start building your visualizations.

Authorizing Data Sources

To use a selected data source connector, you must first authorize the data source.

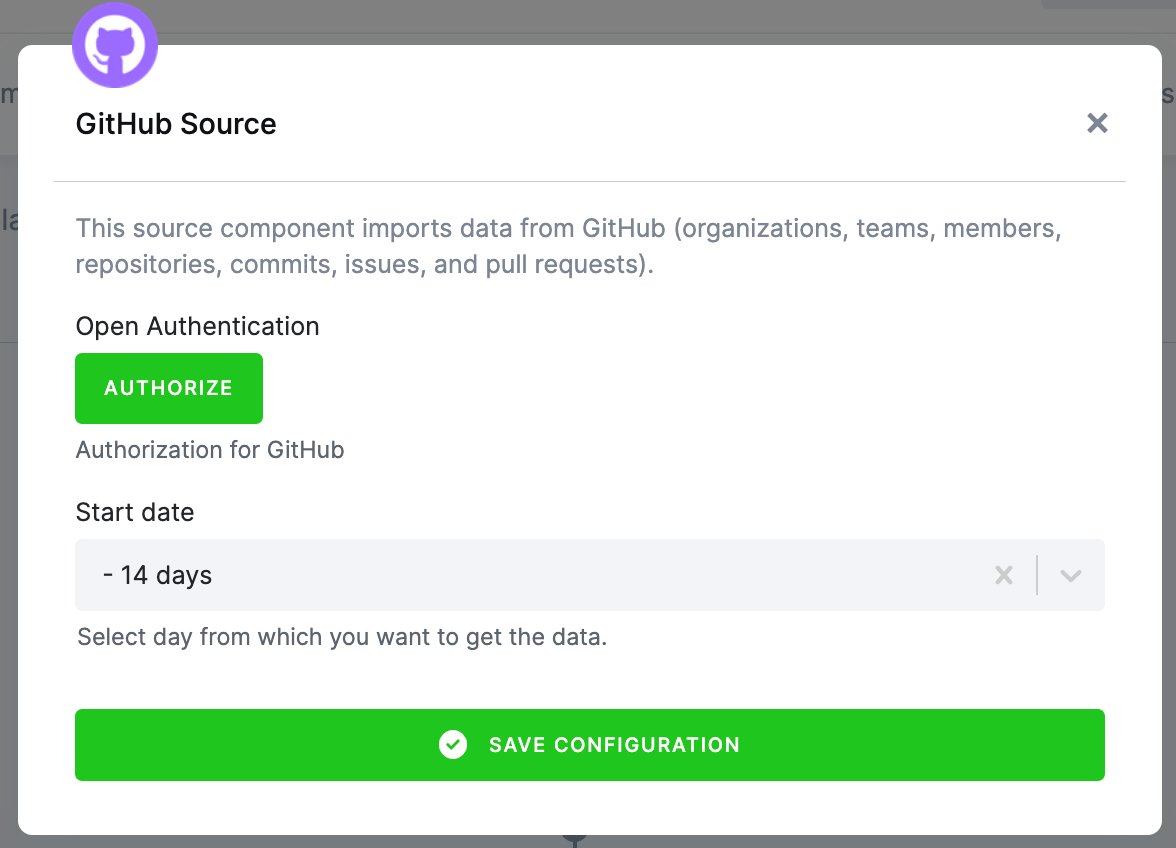

GitHub

Authorize your GitHub account and then select the period for extracting data.

Authorizing Data Destinations

To create a working flow, you must select at least one data destination.

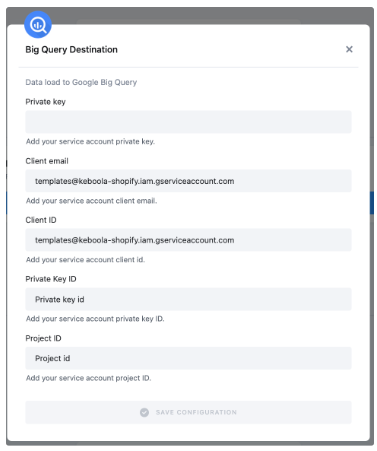

BigQuery Database

To configure the data destination connector, you need to set up a Google Service Account and create a new JSON key.

A detailed guide is available here.

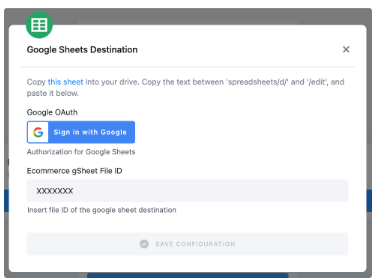
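Before pasting the JSON key into the BigQuery data destination connector, you may want to confirm that you downloaded the right file. The sketch below is a local sanity check only, not something Keboola runs. It assumes the standard Google Cloud service account key format, in which `type` is `service_account` and `project_id`, `private_key`, and `client_email` are present; the function name `check_service_account_key` is hypothetical. The key contains a private key, so treat it as a secret.

```python
from __future__ import annotations

import json

# A subset of the fields found in a Google Cloud service account JSON key file.
REQUIRED_KEY_FIELDS = ("type", "project_id", "private_key", "client_email")


def check_service_account_key(raw_json: str) -> list[str]:
    """Return problems found in a service account key; an empty list means it looks usable."""
    try:
        key = json.loads(raw_json)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    if not isinstance(key, dict):
        return ["top-level value must be a JSON object"]
    # Report every required field that is absent or empty.
    problems = [f"missing field: {name}" for name in REQUIRED_KEY_FIELDS if not key.get(name)]
    if key.get("type") and key["type"] != "service_account":
        problems.append(f"unexpected type: {key['type']!r}")
    return problems
```

An empty result only means the file has the expected shape; whether the service account has the right BigQuery permissions is decided in Google Cloud, not by this check.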

Google Sheets

Authorize your Google account.

Duplicate the sheet into your Google Drive and paste its file ID back into Keboola. The ID is needed to map the data correctly into your duplicated Google Sheet.

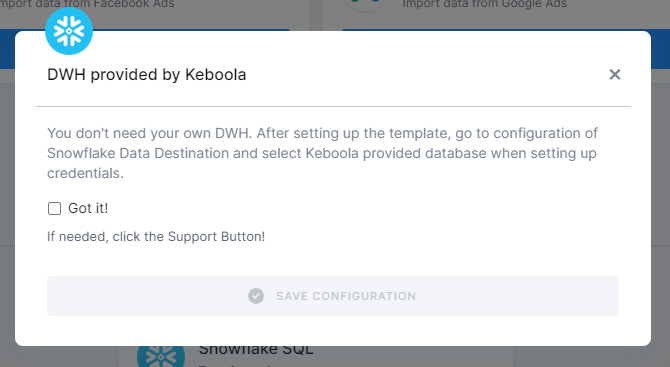
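The file ID is the part of the Google Sheets URL between `/spreadsheets/d/` and the next `/`. A small helper like the hypothetical `sheet_file_id` below can pull it out of a copied URL. It assumes the usual `https://docs.google.com/spreadsheets/d/<FILE_ID>/edit` URL shape and an ID made of letters, digits, `-`, and `_`.

```python
import re

# Google Sheets URLs usually look like:
#   https://docs.google.com/spreadsheets/d/<FILE_ID>/edit#gid=0
_SHEET_URL_ID = re.compile(r"/spreadsheets/d/([A-Za-z0-9_-]+)")
_BARE_ID = re.compile(r"[A-Za-z0-9_-]+")


def sheet_file_id(url_or_id: str) -> str:
    """Return the file ID from a Google Sheets URL, or the input itself if it already looks like an ID."""
    text = url_or_id.strip()
    match = _SHEET_URL_ID.search(text)
    if match:
        return match.group(1)
    if _BARE_ID.fullmatch(text):
        return text
    raise ValueError(f"could not find a Google Sheets file ID in {url_or_id!r}")
```

Accepting a bare ID as well as a full URL means the helper is harmless to run on a value that has already been trimmed.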

Snowflake Database Provided by Keboola

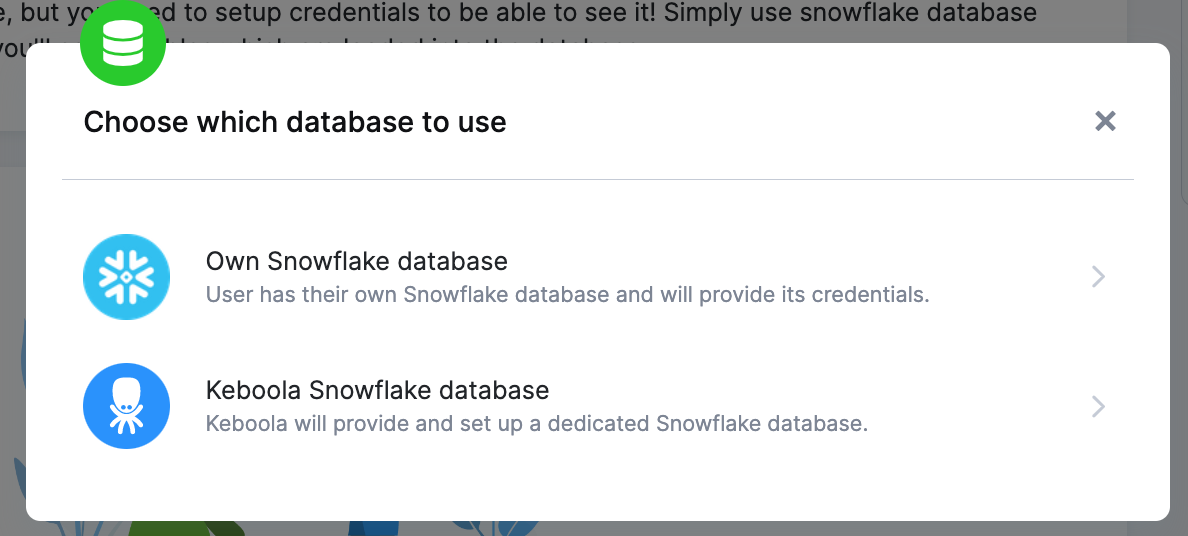

If you do not have your own data warehouse, follow the instructions and we will create a database for you:

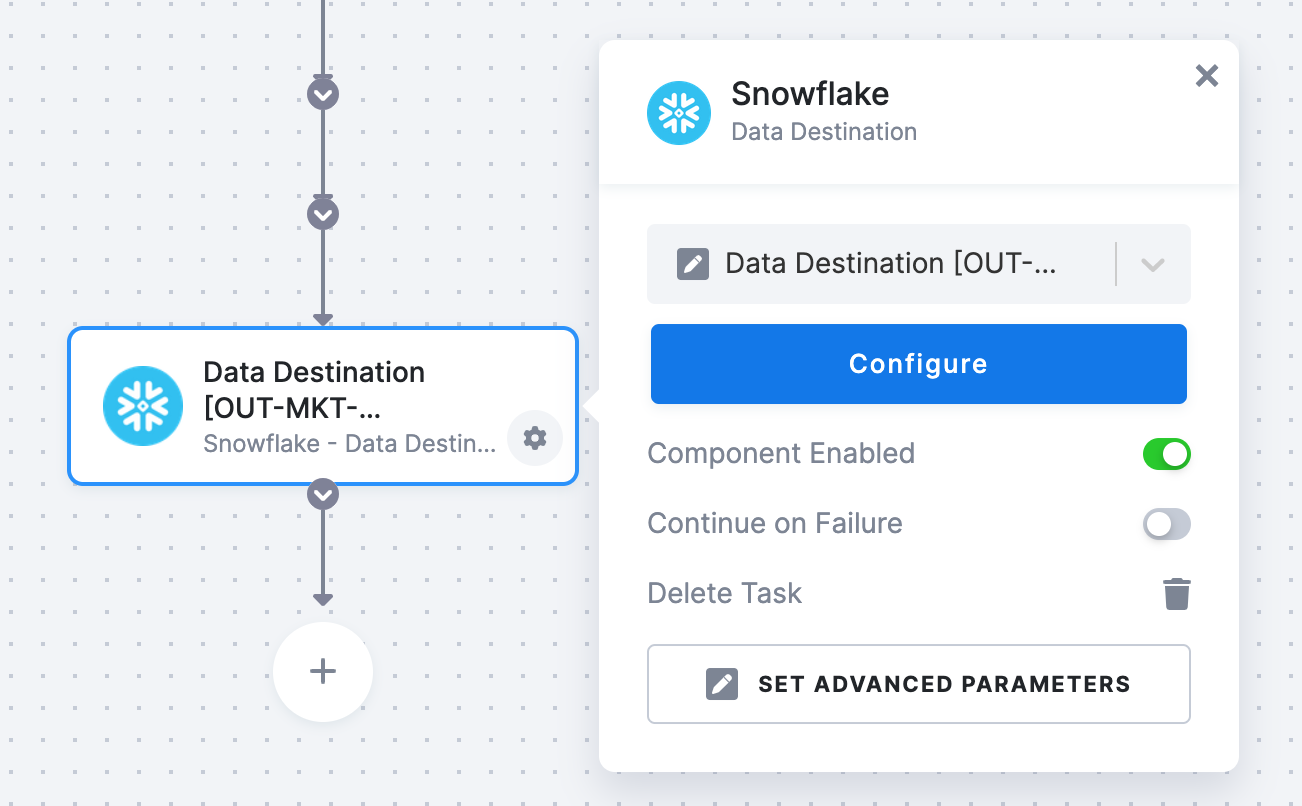

- After you click Save, the template will be applied in your project, and you will see a flow.

- Open the flow and click Snowflake Data Destination to configure it. You will be redirected to the data destination configuration and asked to set up credentials.

- Select Keboola Snowflake database.

- Then go back to the flow and click Run.

Everything is set up.

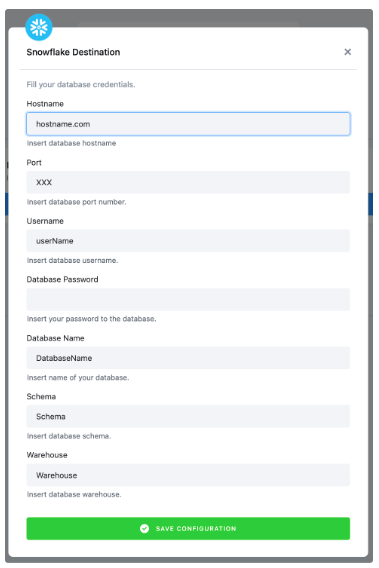

Snowflake Database

If you want to use your own Snowflake database, you must provide the host name (account name), user name, password, database name, schema, and a warehouse.

We highly recommend creating a dedicated user for the data destination connector in your Snowflake database. You must then grant this user access to the Snowflake warehouse.
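As a checklist, the settings listed above can be modeled as one record with a helper that reports which ones are still empty. This is a hypothetical sketch: the class and field names are illustrative and do not correspond to the connector's actual configuration keys.

```python
from __future__ import annotations

from dataclasses import dataclass, fields


@dataclass
class SnowflakeDestinationSettings:
    """Values the Snowflake data destination connector asks for (names are illustrative)."""

    host: str  # host name (account name)
    user: str  # ideally a dedicated user for the connector
    password: str
    database: str
    schema: str
    warehouse: str  # the user must be granted access to this warehouse

    def missing(self) -> list[str]:
        """Return the names of settings that are empty or whitespace-only."""
        return [f.name for f in fields(self) if not str(getattr(self, f.name)).strip()]
```

A non-empty result from `missing()` points at the same kind of gap as the "Missing Credentials to Snowflake Database" error described below.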

Warning: Keep in mind that Snowflake is case sensitive: identifiers that are not quoted are converted to upper case. For example, if you run the query `CREATE SCHEMA john.doe;`, you must enter the schema name as `DOE` in the data destination connector configuration.

More info here.
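The identifier rule from the warning above can be sketched in a few lines of Python. The helper names are hypothetical. The sketch assumes Snowflake's documented behavior that unquoted identifiers are resolved in upper case while double-quoted identifiers keep their exact case (with `""` inside quotes standing for one `"`), and it does not handle dots inside quoted names.

```python
from __future__ import annotations


def resolve_identifier(identifier: str) -> str:
    """Return the name Snowflake stores for a single identifier."""
    if len(identifier) >= 2 and identifier.startswith('"') and identifier.endswith('"'):
        # Quoted: keep the exact case; a doubled quote stands for one literal quote.
        return identifier[1:-1].replace('""', '"')
    # Unquoted: Snowflake resolves it in upper case.
    return identifier.upper()


def resolve_qualified_name(name: str) -> list[str]:
    """Split a simple dotted name such as john.doe and resolve each part."""
    return [resolve_identifier(part) for part in name.split(".")]
```

For example, `resolve_qualified_name("john.doe")` returns `["JOHN", "DOE"]`, so the schema to enter in the connector is `DOE`; had the schema been created as `john."doe"`, it would be `doe`.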

Most Common Errors

Before turning to the Keboola support team for help, make sure your error is not a common problem that can be solved without our help.

Missing Credentials to Snowflake Database

If you see the error pictured below, you have probably forgotten to set up the Snowflake database.

Click the highlighted text under Configuration in the top left corner. This will redirect you to the Snowflake Database connector. Now follow the steps for the Snowflake Database Provided by Keboola in the section Authorizing Data Destinations.

Then go to the Jobs tab and Run the flow again.