Google BigQuery

This data destination connector sends data to a Google BigQuery dataset.

Create Service Account

To access and write to your BigQuery dataset, you need to set up a Google Service Account.

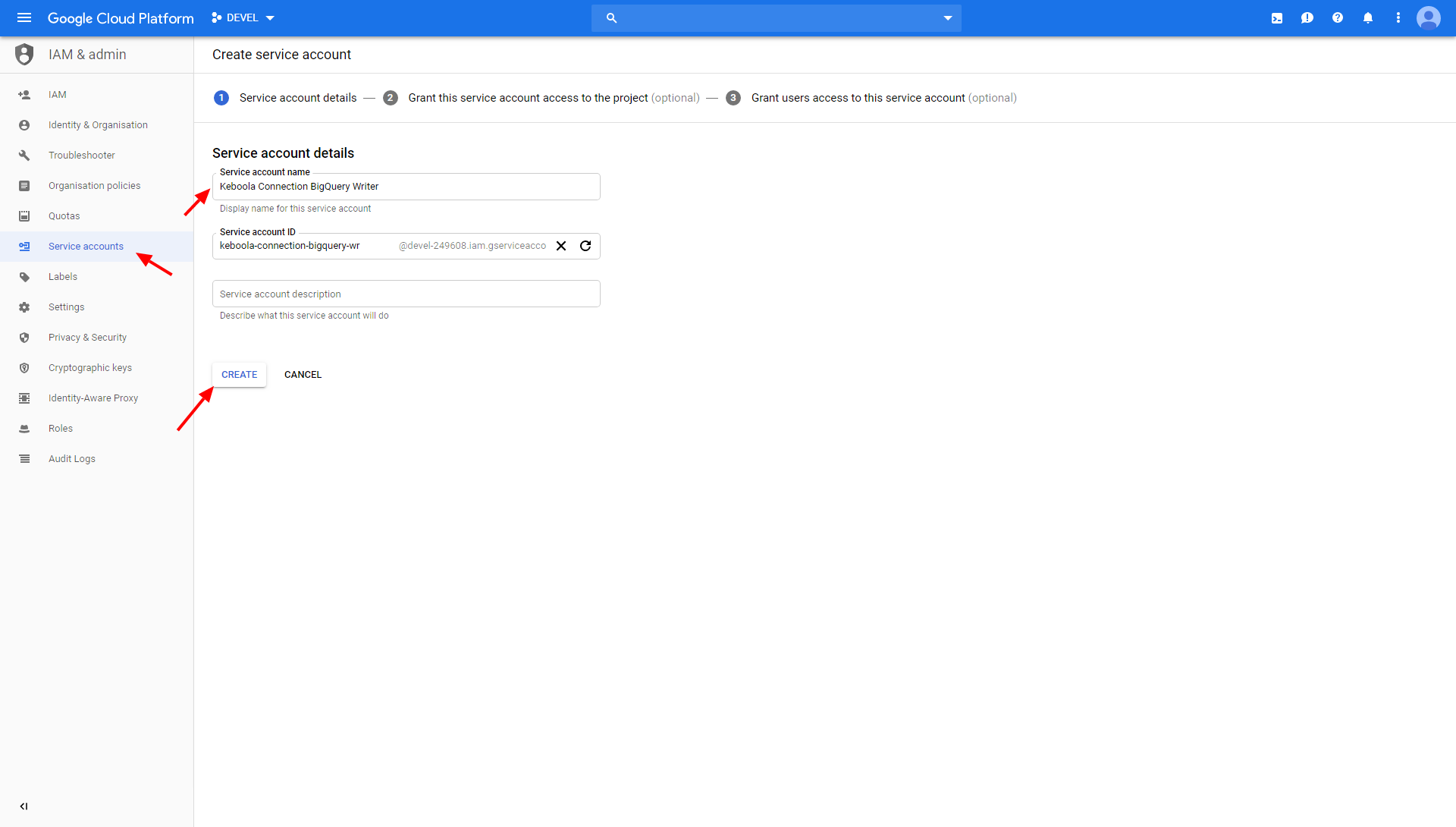

- Go to Google Cloud Platform Console > IAM & admin > Service accounts.

- Select the project you want the data destination connector to have access to.

- Click Create Service Account.

- Select an appropriate Service account name (e.g., Keboola BigQuery data source).

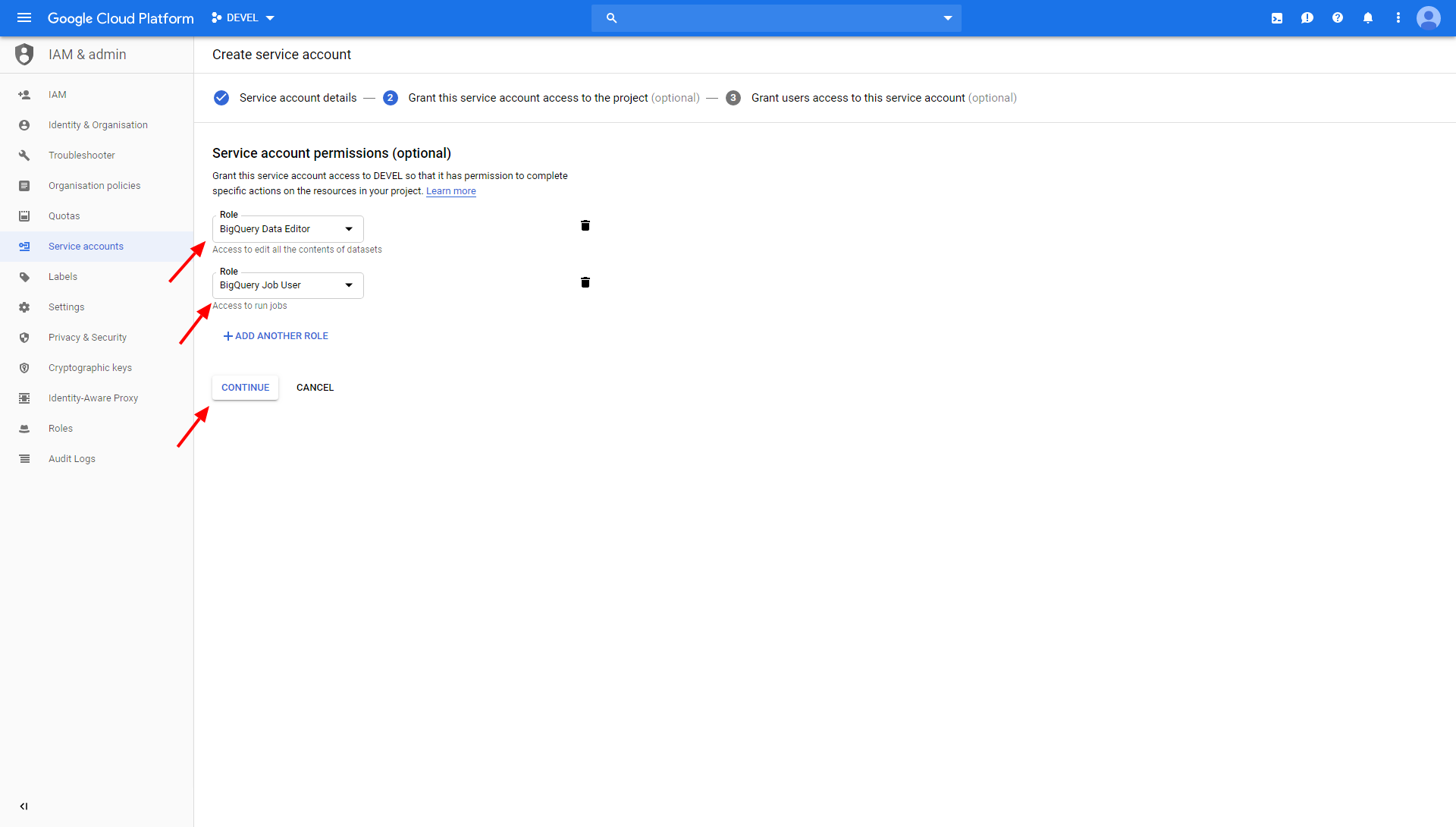

Then add the BigQuery Data Editor and BigQuery Job User roles.

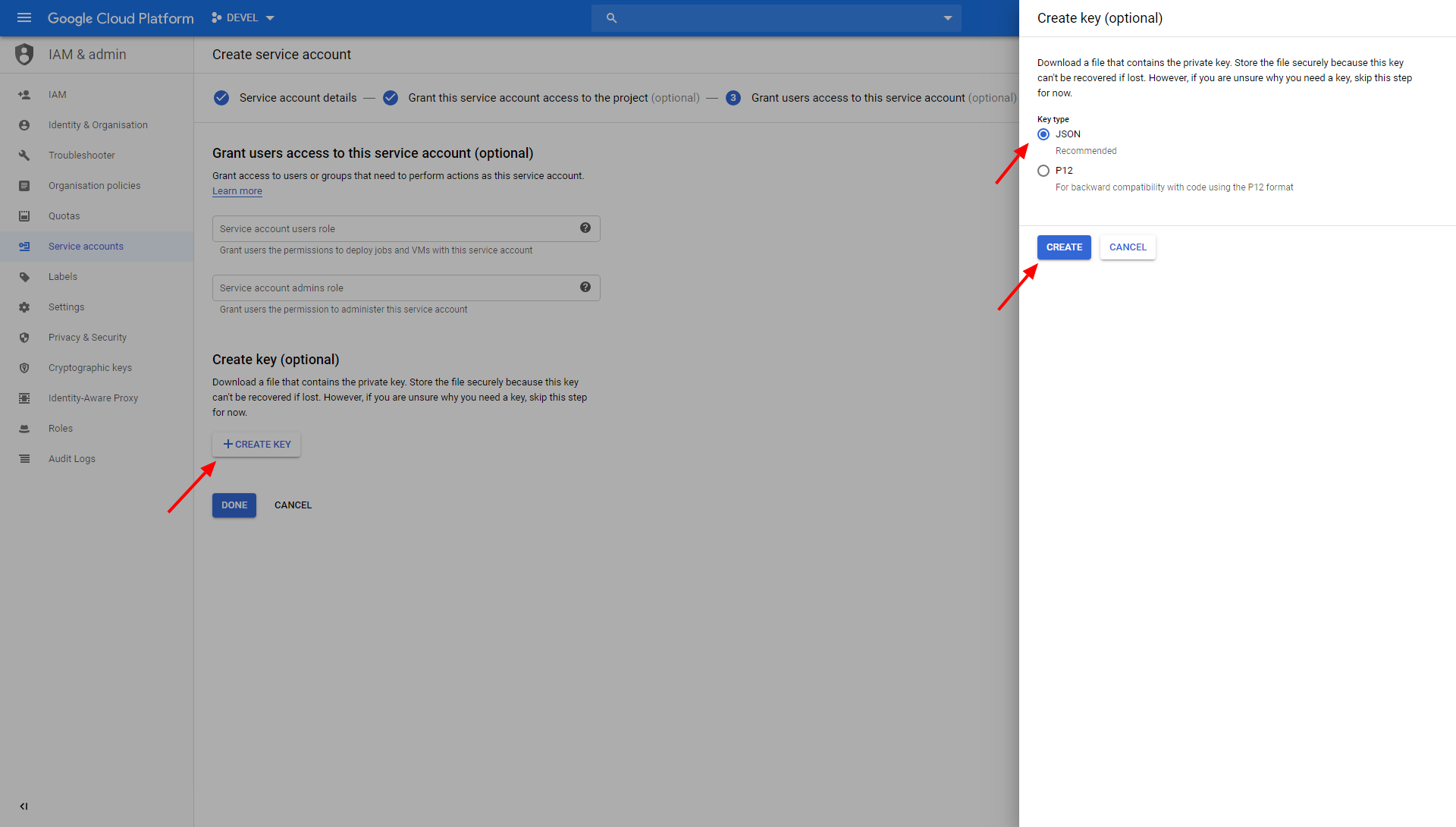

Finally, create a new JSON key (click + Create key) and download it to your computer (click Create).

You can now close the Google Cloud Platform Console and go back to configuring the connector.
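Before pasting the key into the connector, it can help to confirm that the downloaded file really is a service account key. The following is a minimal sketch, not part of the connector: the function name `check_service_account_key` is hypothetical, and the list of required fields is an assumption based on the usual layout of Google service account JSON keys.

```python
import json

# Fields a Google service account JSON key normally contains (assumed list).
REQUIRED_FIELDS = ("type", "project_id", "private_key_id", "private_key", "client_email")


def check_service_account_key(raw: str) -> list:
    """Return a list of problems found in the key text (empty if none)."""
    try:
        key = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    if not isinstance(key, dict):
        return ["top-level JSON value is not an object"]
    # Report every required field that is absent or empty.
    problems = [f"missing field: {name}" for name in REQUIRED_FIELDS if not key.get(name)]
    # A user-account or OAuth client file would carry a different "type".
    if key.get("type") and key["type"] != "service_account":
        problems.append(f"unexpected type: {key['type']!r}")
    return problems
```

Calling `check_service_account_key(open("key.json").read())` (the file name is a placeholder) returns an empty list when the file looks like a service account key.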

Configuration

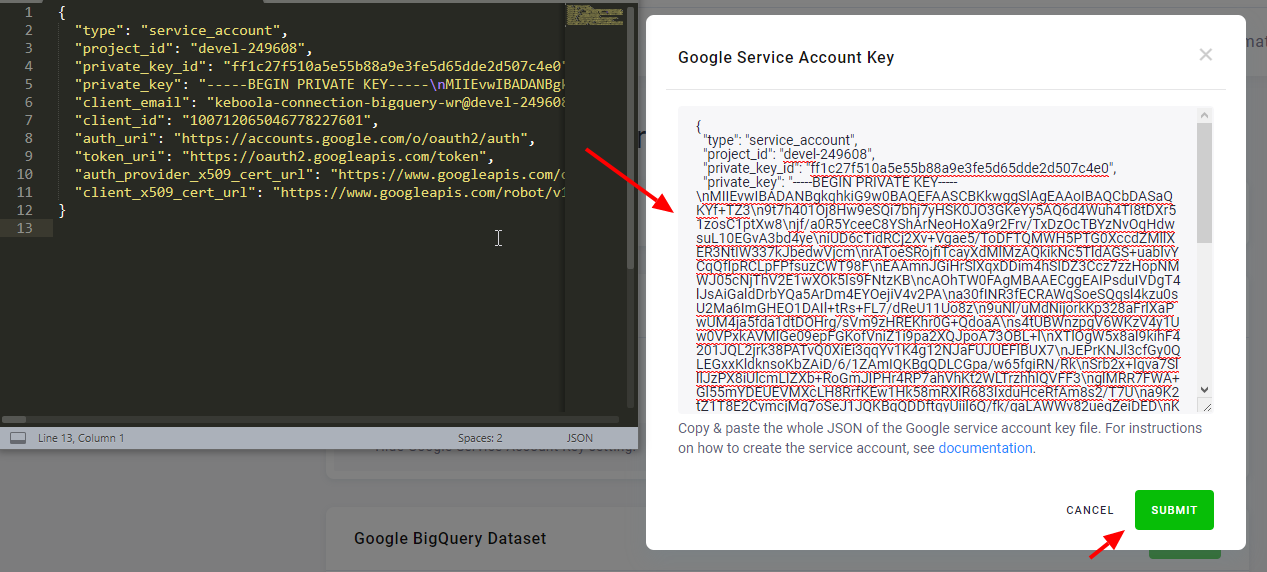

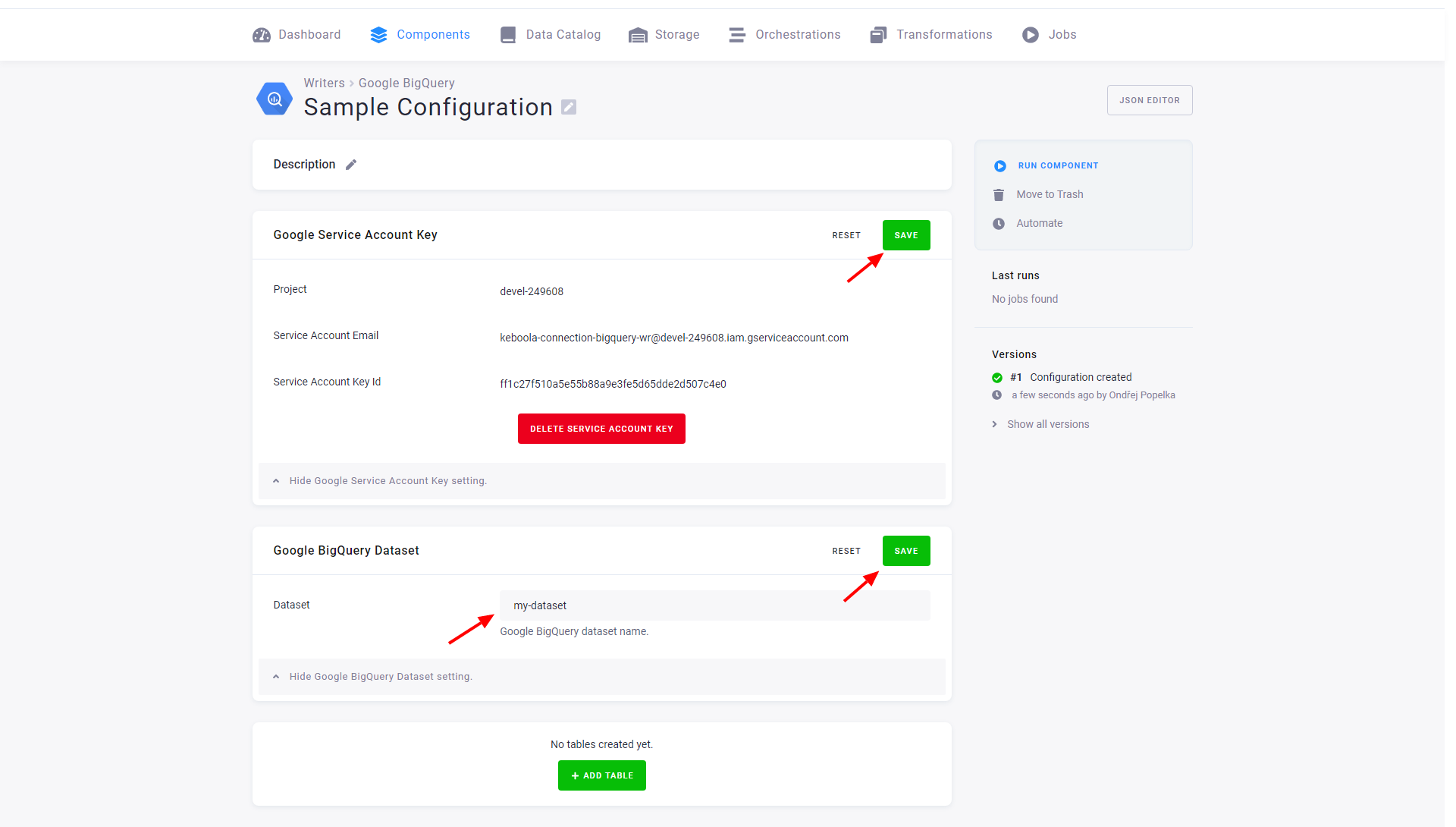

Create a new configuration of the BigQuery connector. Click the Set Service Account Key button. Open the downloaded key in a text editor, copy its entire contents, paste them into the input field, click Submit, and then click Save.

Important: The private key is stored in an encrypted form and only the non-sensitive parts are visible in the UI for your verification. The key can be deleted or replaced by a new one at any time. Don’t forget to Save the credentials.

There is one more thing left to do before you can start adding tables. Specify the Google BigQuery Dataset and Save it.

All tables in this configuration will be written to this dataset. If the dataset does not exist, the roles assigned to the Google Service Account will allow the connector to create it.
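A dataset name that BigQuery rejects will cause the write to fail, so a quick local check can save a run. This sketch assumes BigQuery's documented naming rule for datasets (letters, digits, and underscores, at most 1,024 characters); the helper name `is_valid_dataset_name` is hypothetical and is not something the connector provides.

```python
import re

# Assumed rule: ASCII letters, digits, and underscores, 1 to 1024 characters.
_DATASET_NAME = re.compile(r"[A-Za-z0-9_]{1,1024}")


def is_valid_dataset_name(name: str) -> bool:
    """Return True if the name matches the assumed BigQuery dataset naming rule."""
    return _DATASET_NAME.fullmatch(name) is not None
```

For example, `is_valid_dataset_name("keboola_output")` passes, while a name containing a hyphen or a space does not.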

Add Tables

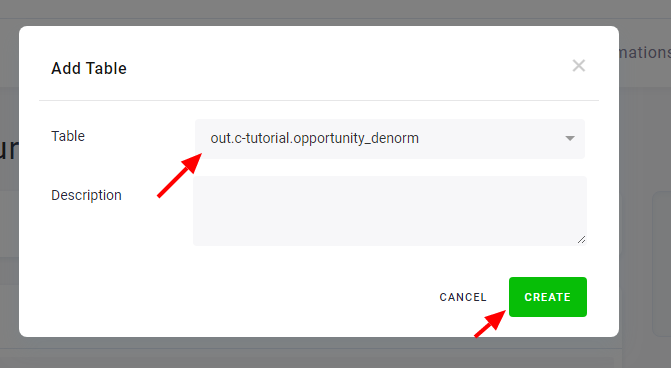

To add a new table to the connector, click Add Table and select the table. The table name will be used to create the destination table name in BigQuery and can be modified.

Configured tables are stored as configuration rows.

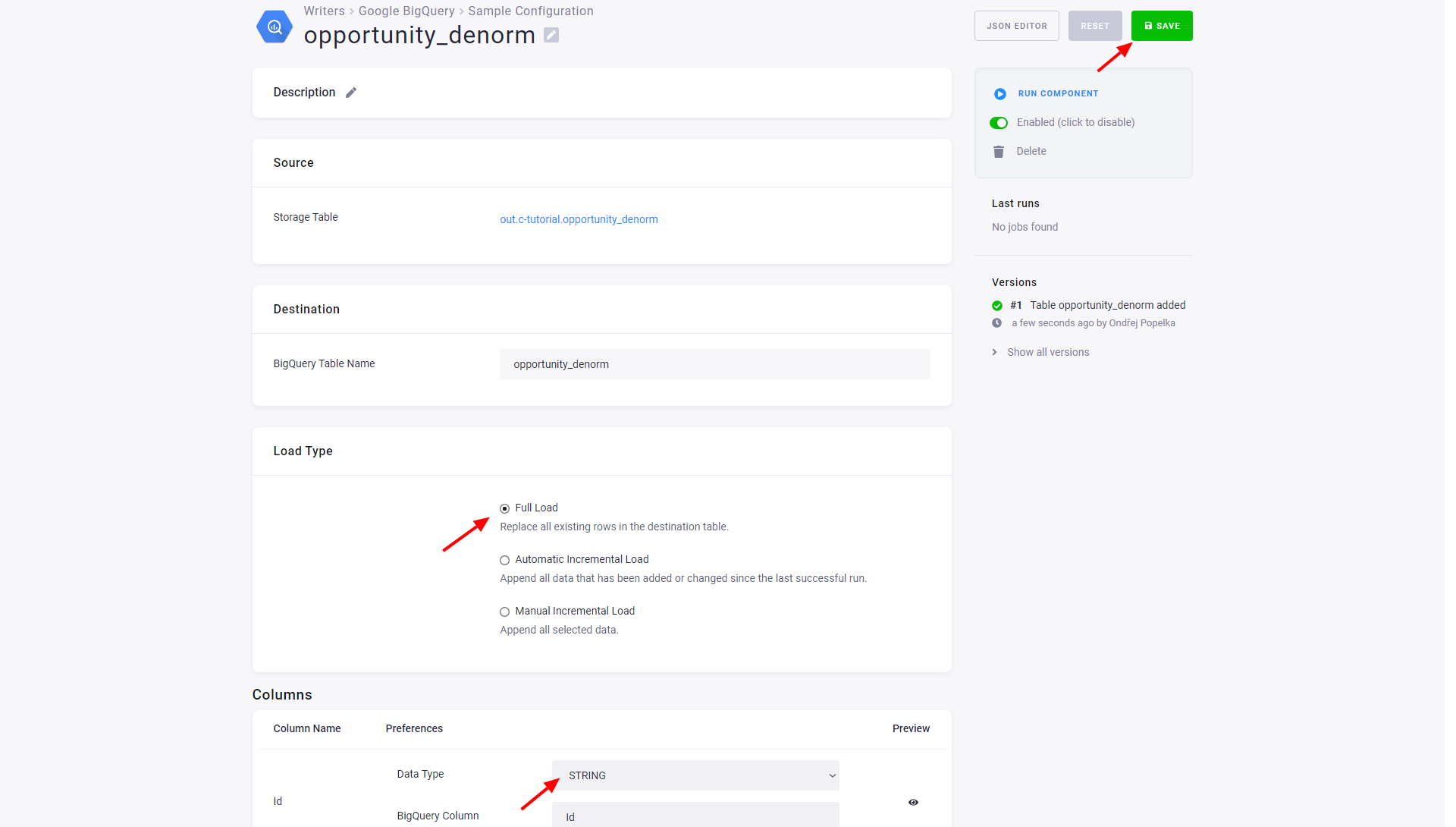

Destination

You can specify the table name in BigQuery and set the load type to Full Load or Incremental.

Note: The Incremental load type does not use a primary key to update existing records; new records are always appended to the table.
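The difference between the two load types can be illustrated with a small in-memory model. This is only a sketch of the semantics, not the connector's code: the `load` function is hypothetical, and it assumes that Full Load replaces the table contents.

```python
def load(destination: list, incoming: list, incremental: bool) -> list:
    """Return the destination rows after a load.

    Full Load (assumed): the destination rows are replaced by the incoming rows.
    Incremental: the incoming rows are appended; no key is matched or updated.
    """
    if incremental:
        return destination + incoming
    return list(incoming)


existing = [{"id": 1, "status": "new"}]
incoming = [{"id": 1, "status": "paid"}, {"id": 2, "status": "new"}]

after_incremental = load(existing, incoming, incremental=True)
after_full = load(existing, incoming, incremental=False)
```

After the incremental load, `id` 1 appears twice (once as `new`, once as `paid`), so any deduplication has to happen before the data is written or downstream in BigQuery.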

Columns

You can rename the destination column in BigQuery and specify its data type. The eye icon on the right shows a preview of the column's values, so you don’t have to guess the data type.

Note: You have to define a data type on at least one column for the configuration to work.
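For tables with many columns, the same kind of inspection the preview offers can be approximated in code. The helper below is a hypothetical sketch: it takes sample values as strings and suggests a BigQuery standard SQL type name. The type labels offered in the connector UI may differ, and the suggestion is only as good as the sample.

```python
from datetime import date


def guess_bigquery_type(values: list) -> str:
    """Suggest a BigQuery type name for a column from sample string values."""
    samples = [v for v in values if v != ""]  # ignore empty cells
    if not samples:
        return "STRING"

    def all_match(parse) -> bool:
        # True only if every sample can be parsed by the given function.
        for v in samples:
            try:
                parse(v)
            except ValueError:
                return False
        return True

    if all_match(int):
        return "INT64"
    if all_match(float):
        return "FLOAT64"
    if all_match(date.fromisoformat):
        return "DATE"
    return "STRING"
```

Checking the narrowest type first means a column of whole numbers is suggested as `INT64` rather than `FLOAT64`, and anything that fails every parser falls back to `STRING`.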

© 2026 Keboola